How do you make delightful discoveries in vast online collections of artworks, objects and specimens?

Now that many galleries, libraries, archives and museums (known collectively as GLAM) have catalogued their collections online, that’s exactly the question curators are asking themselves.

So we set ourselves a challenge: how can we create an enjoyable way of exploring these extensive collections?

The answer: by making discovery as easy as scribbling on a screen.

We began an in-house R&D experiment to explore a fun way of finding surprise in a digital treasure trove of interesting objects. We combined touch screen drawings with machine learning to create an innovative search experience using digitised doodles. The resulting prototype is called Tuhi.

So when we heard Te Papa were working toward releasing their Collections API, we proposed partnering up to explore the full potential of their extensive dataset.

The Challenge: Helping searchers explore vast digital collections

Te Papa’s Collections Online has information on more than 800,000 artworks, objects, and specimens from Te Papa’s collections.

You can typically search the online collection in just one way — by typing into a search box. While this is great if you know what you’re looking for, it doesn’t help if you want to be surprised by obscure artefacts, inspiring art, or precious taonga.

Our Approach: Improving the discovery experience

Our developers compared the websites of different museums to get a deeper understanding of the problem we were trying to solve. We discovered that many museums put their collections online, but the collections are difficult to search and often there’s no dedicated way to discover what else a museum holds. We decided to focus on the discovery experience, and make it as delightful as possible.

After a few brainstorming sessions, we were intrigued by the idea of users actually drawing art themselves. So the next logical step was to use drawing as the way to search.

Training our machine learning model on doodle data

To test if drawings would work as input, we used Quick, Draw!, an open-source dataset from Google. It has millions of user-submitted drawings that developers can use to train new neural networks. Using TensorFlow, we trained an open-source, long short-term memory (LSTM)-based model on the Quick, Draw! dataset. Having learned from millions of example drawings, the model can recognise the patterns they share and make an educated guess about what you’ve drawn, whether it's a cat, a car, or a Colin McCahon.
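As a rough illustration of how a drawing can be turned into a sequence an LSTM can read, the sketch below flattens strokes into rows of offsets plus an end-of-stroke flag. This layout follows a convention commonly used with Quick, Draw!-style data; it is an assumption here, not a confirmed detail of Tuhi's pipeline, and the function name `strokes_to_deltas` is hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Stroke = List[Point]
Row = Tuple[float, float, int]  # (dx, dy, end_of_stroke)


def strokes_to_deltas(strokes: List[Stroke]) -> List[Row]:
    """Flatten strokes into (dx, dy, end_of_stroke) rows scaled to a unit box.

    Hypothetical helper: the row layout is an assumed convention, not
    necessarily the one the Tuhi model was trained on.
    """
    # Tag the last point of every stroke with 1, all other points with 0.
    points = [
        (x, y, 1 if i == len(stroke) - 1 else 0)
        for stroke in strokes
        for i, (x, y) in enumerate(stroke)
    ]
    if len(points) < 2:
        return []

    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    min_x, min_y = min(xs), min(ys)
    # Fall back to 1.0 so perfectly flat drawings do not divide by zero.
    span_x = (max(xs) - min_x) or 1.0
    span_y = (max(ys) - min_y) or 1.0

    scaled = [
        ((x - min_x) / span_x, (y - min_y) / span_y, end)
        for x, y, end in points
    ]
    # Each row is the offset from the previous point; the first point is dropped.
    return [
        (cur[0] - prev[0], cur[1] - prev[1], cur[2])
        for prev, cur in zip(scaled, scaled[1:])
    ]
```

Scaling to a unit box means a small doodle and a large one produce the same sequence, so the model only has to learn shape rather than size.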

Fine-tuning drawing recognition

To improve the accuracy of drawing recognition, we needed to tune how the model was trained. This took several experiments with the model’s training parameters.

Over three weeks, we made several optimisations to the modelling code to improve its performance.

After one and a half weeks, we’d developed a Docker and Flask-based back-end application to smoothly handle the drawing inputs.

Sampling drawings accurately

We trained our model on drawings with a particular point resolution (the distance between points in a stroke or drawing). We had to make sure that the input data sent from the front-end was sampled with roughly the same resolution. If the sampling resolution was too high or too low, it would reduce the model’s ability to recognise the drawing accurately. So you might draw a dog, but Tuhi thinks you’ve drawn a horse.

We built the front-end app in JavaScript, HTML, and CSS, and used Python for the API. We developed a sampling algorithm that acts as an interface between the front-end app, which produces a stream of points representing a drawing, and the trained model, which recognises the drawing. The sampling algorithm filters out unwanted points in the stream based on their coordinates and timestamps.

Filtering the points creates an optimal resolution — not too high, not too low — for the best prediction accuracy.
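A minimal sketch of this kind of filter is shown below, assuming each point arrives as `(x, y, timestamp_ms)`. The function name `resample` and both thresholds are hypothetical placeholders rather than the values used in Tuhi; in practice they would be tuned to match the resolution of the training data.

```python
import math
from typing import List, Tuple

TimedPoint = Tuple[float, float, float]  # (x, y, timestamp in milliseconds)


def resample(
    points: List[TimedPoint],
    min_distance: float = 5.0,      # assumed threshold, in canvas pixels
    min_interval_ms: float = 10.0,  # assumed threshold, in milliseconds
) -> List[TimedPoint]:
    """Drop points that are too close in space or time to the last kept point."""
    if not points:
        return []

    kept = [points[0]]
    for x, y, t in points[1:]:
        last_x, last_y, last_t = kept[-1]
        far_enough = math.hypot(x - last_x, y - last_y) >= min_distance
        late_enough = (t - last_t) >= min_interval_ms
        if far_enough and late_enough:
            kept.append((x, y, t))

    # Always keep the final point so the stroke ends where the pen lifted.
    if kept[-1] != points[-1]:
        kept.append(points[-1])
    return kept
```

This sketch only thins out a stream that is too dense. A stream that is too sparse would need extra points interpolated between samples, which is not covered here.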

Designing a digital canvas

Once we were confident in our initial proof of concept, we approached Te Papa about a possible collaboration based around their newly created Collections API. Our design team quickly created a prototype for Te Papa to show to their stakeholders.

We went with a straightforward and intuitive user interface, designed in line with Te Papa’s design language system (DLS). It’s a blank digital canvas that beckons visitors to try their hand at sketching.

Making a blank canvas less scary

To help users get an idea of what they could draw from the near-endless possibilities, Tuhi gives open-ended sketching prompts, such as “something to eat” or “a vehicle”.

We wanted to encourage users to extend their imaginations — and test Tuhi’s drawing recognition ability — so we were careful not to tell people exactly what to draw.

Simple scribble or masterful sketch

We conducted informal user testing on Te Papa’s museum floor, and found that participants thought their drawings needed to be highly detailed to be recognised.

Thankfully, Tuhi is capable of recognising even the simplest of scribbles.

We even created a few simple example drawings of our own. These display randomly on the screen when Tuhi is idle, and show that even those of us with limited artistic ability have a shot at having our scribbles recognised.

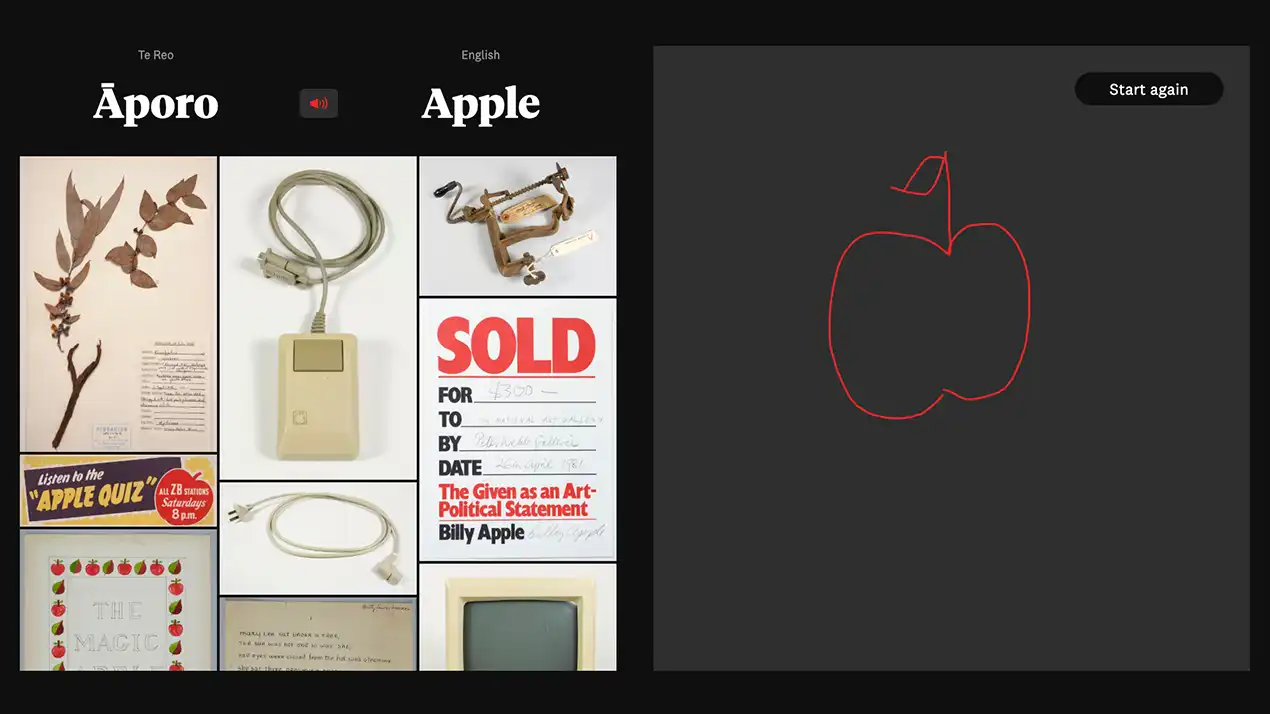

A mosaic of matches

After you finish your drawing, matching items from the collection appear in a mosaic pattern next to the canvas. The layout was inspired by sites with dense imagery like Google Images, masonry layouts like Pinterest, other interactives using Te Papa’s DLS, as well as the Quick, Draw! website itself. Alongside the results, the label for each recognised drawing is given in English and te reo Māori, encouraging you to learn a few new words.
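One simple way to attach a bilingual label to a recognised drawing is a lookup keyed on the model's predicted class, sketched below. The names `BilingualLabel`, `LABELS`, and `label_for` are hypothetical, and the four entries are illustrative only; a real deployment would rely on vetted translations for every class.

```python
from typing import Dict, NamedTuple


class BilingualLabel(NamedTuple):
    english: str
    te_reo: str


# Illustrative entries only (assumption: classes are keyed by lowercase English name).
LABELS: Dict[str, BilingualLabel] = {
    "cat": BilingualLabel("Cat", "Ngeru"),
    "dog": BilingualLabel("Dog", "Kurī"),
    "horse": BilingualLabel("Horse", "Hōiho"),
    "car": BilingualLabel("Car", "Motokā"),
}


def label_for(predicted_class: str) -> BilingualLabel:
    """Return the bilingual label for a predicted class.

    Falls back to the raw class name with an empty te reo Māori field
    when no translation is on file.
    """
    key = predicted_class.strip().lower()
    return LABELS.get(key, BilingualLabel(predicted_class, ""))
```

Returning an empty te reo Māori field for unknown classes lets the interface decide whether to hide the second label instead of showing a guess.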

The Result: A prototype for future development

Drawing with your finger makes for an engaging and intuitive way of exploring an online collection. People who interact with Tuhi react with a sense of surprise and wonder, and will often try a range of drawings to see what they discover.

You could discover items in these vast collections that you might otherwise have never found — and learn some new words of te reo Māori along the way.

Te Papa showed Tuhi to its stakeholders, who received it with a lot of enthusiasm. As it’s still a prototype, Tuhi hasn’t been released to the public. But with further development, it could encourage wider use of Te Papa’s Collections API, and greater engagement with their collection online.
